Strawberry: The Pain Points of General-Purpose Large Language Models


The field of Artificial Intelligence (AI) has made tremendous progress in recent years, especially with the development of large language models (LLMs), which have opened up new ways for us to communicate with machines. However, as these models are integrated into everyday life and work, their failures on some basic, common-sense questions have exposed their limitations.

According to online rumors, OpenAI is about to release a new type of large language model called "Strawberry." One of its most notable rumored features is the ability to "think" for 10 to 20 seconds, reflecting on and checking its own reasoning, before answering complex questions. This differs fundamentally from the immediate-response approach of existing LLMs and appears to point to a new direction in AI development.

1. Strawberry: A New Mode of Self-Reflection and Self-Checking

Currently released LLMs have been praised for responding quickly in most scenarios. Trained with advanced algorithms on large amounts of data, they can rapidly interpret a question and generate an answer. However, when faced with certain tricky or misleading common-sense questions, they often give laughably wrong answers. A classic example is "Which is bigger, 9.11 or 9.8?" Many LLMs answer 9.11: they treat the digits after the decimal point as whole numbers and conclude that 11 is greater than 8, ignoring that 0.11 is less than 0.8 (that is, 9.8 = 9.80 > 9.11).
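To make the mistake concrete, here is a small sketch contrasting the faulty reasoning described above with a correct comparison. The function names are illustrative only and do not come from any library. The faulty version compares the digits after the decimal point as whole numbers (11 versus 8); the correct version compares exact decimal values, which is equivalent to padding 9.8 to 9.80 before comparing digit by digit.

```python
from decimal import Decimal


def naive_larger(a: str, b: str) -> str:
    """Reproduce the faulty reasoning: compare the integer parts, then compare
    the digits after the decimal point as if they were whole numbers."""
    a_int, a_frac = a.split(".")
    b_int, b_frac = b.split(".")
    if int(a_int) != int(b_int):
        return a if int(a_int) > int(b_int) else b
    # Here 11 > 8, which is exactly the mistake: .11 is actually smaller than .8
    return a if int(a_frac) > int(b_frac) else b


def correct_larger(a: str, b: str) -> str:
    """Compare the exact decimal values (9.8 == 9.80 > 9.11)."""
    return a if Decimal(a) > Decimal(b) else b


print(naive_larger("9.11", "9.8"))    # 9.11 (wrong)
print(correct_larger("9.11", "9.8"))  # 9.8
```

Using `Decimal` rather than `float` keeps the comparison exact for the decimal strings as written, so the sketch does not introduce rounding issues of its own.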

One of Strawberry's reported design goals is to avoid such errors. When faced with similar questions, it "thinks" and "reflects" before answering instead of reasoning mechanically. To do this, it is rumored to spend extra time simulating the reflection and self-checking that humans perform: rather than responding to a complex question immediately, it examines its own reasoning for logical errors or misleading cues, thereby improving the accuracy of its answers.

2. The Naming of Strawberry and Common-Sense Challenges

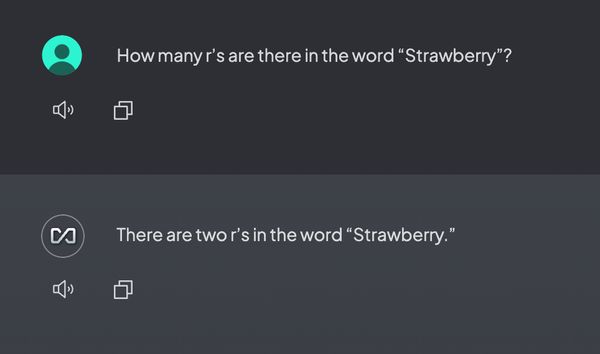

It is worth asking why OpenAI chose "Strawberry" as the name for this model. OpenAI has not given an official explanation, but clues can be found in some well-known LLM errors. Besides the decimal comparison error mentioned above, another interesting example is the question of how many times the letter "r" appears in the English word "strawberry." Surprisingly, many LLMs answer that there are two, although the word contains three.

This happens because the models do not analyze the word carefully when generating an answer; instead, they jump to a conclusion based on common vocabulary patterns. If a model performed one extra counting check, it would arrive at the correct answer. These unexpected errors not only highlight a pain point of large language models but also reveal their lack of reflection and self-checking.
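The "extra counting check" can be sketched in a few lines. The claimed answer of two below is a hypothetical hasty response used only for illustration; the check counts characters one at a time and flags the mismatch. Ignoring case is an assumption made so that "Strawberry" and "strawberry" are treated the same.

```python
def count_letter(word: str, letter: str) -> int:
    """Count occurrences of a letter one character at a time, ignoring case."""
    return sum(1 for ch in word.lower() if ch == letter.lower())


def check_claim(word: str, letter: str, claimed: int) -> tuple[bool, int]:
    """Return whether a claimed count is correct, plus the actual count."""
    actual = count_letter(word, letter)
    return claimed == actual, actual


# A hasty answer of 2 fails the check; the actual count is 3 (st-r-awbe-r-r-y).
print(check_claim("Strawberry", "r", 2))  # (False, 3)
```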

3. Reflection and Checking: The Future of AI Reasoning?

The fundamental reason these common-sense questions become the "Achilles' heel" of AI models is the lack of a reflection and checking mechanism during reasoning. Most current LLMs rely on probability-driven text generation: they produce each word according to how likely it is given the preceding text. Although they can produce answers quickly, they do not go through the "self-doubt" and "reconfirmation" steps that humans do. As a result, on questions that require logical or multi-step reasoning, they may make judgments that seem reasonable but are actually wrong.

Strawberry reportedly adds extra "thinking time" for self-checking instead of responding immediately, mimicking the careful way humans work through a question. This mechanism aims to improve answer accuracy and may also represent a new approach to reasoning and decision-making: rather than relying solely on the probabilistic output of a language model, the AI attempts to validate its result before presenting an answer, improving its ability to handle complex questions.
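The rumored draft, check, and revise cycle can be illustrated with a toy loop. This is not OpenAI's method, whose details have not been published; `hasty_draft`, `careful_draft`, and `check` are hypothetical stand-ins. A hasty drafter guesses, a slower drafter recomputes, and an independent check decides whether a draft may be returned.

```python
from typing import Callable, Optional, Sequence, Tuple

# (word, letter): "How many times does the letter appear in the word?"
Question = Tuple[str, str]


def hasty_draft(q: Question) -> int:
    # Hypothetical fast answer: a fixed guess standing in for a pattern-based reply.
    return 2


def careful_draft(q: Question) -> int:
    # Hypothetical slower answer: recompute the count with str.count.
    word, letter = q
    return word.lower().count(letter.lower())


def check(q: Question, answer: int) -> bool:
    # Independent verification: walk the word and count matches one by one.
    word, letter = q
    return answer == sum(1 for ch in word.lower() if ch == letter.lower())


def answer_with_self_check(
    q: Question,
    drafters: Sequence[Callable[[Question], int]],
    verify: Callable[[Question, int], bool],
) -> Tuple[Optional[int], int]:
    """Try each drafter in turn; return the first answer that passes verification
    together with the number of attempts used, or (None, attempts) if none pass."""
    attempts = 0
    for draft in drafters:
        attempts += 1
        candidate = draft(q)
        if verify(q, candidate):
            return candidate, attempts
    return None, attempts


print(answer_with_self_check(("Strawberry", "r"), [hasty_draft, careful_draft], check))
# (3, 2)
```

Returning the attempt count makes the extra "thinking time" visible in this toy: the verified answer costs one more round than the hasty guess, which is rejected by the check.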

4. Embracing Challenges: The Significance of Strawberry

Whether it is the decimal comparison problem or counting the letters in "strawberry," these are "traps" that even the most advanced AI models currently fall into. These problems not only expose technical challenges but may also mark an important turning point in the future development of AI.

By naming the new model Strawberry, OpenAI seems to signal that it not only recognizes these problems but is also addressing them through technological progress. Strawberry may become a new milestone in the development of AI models, representing an improvement not only in language understanding and generation but also in reasoning and decision-making, closer to human reflection.

Conclusion

As AI models are increasingly used in daily life, the limits of their reasoning are constantly being tested. OpenAI's upcoming Strawberry appears to be a new attempt to break through existing models' bottleneck on common-sense questions by introducing self-checking and self-reflection. For the future of AI, Strawberry may represent not just stronger generation but a fundamental change in how models reason, which makes it worth looking forward to.

This article was published on 2024-09-12 and last updated on 2024-09-23.

This article is copyrighted by torchtree.com and unauthorized reproduction is prohibited.